How drones learn to fly autonomously

Hums and buzzes fill the air. But what is buzzing here are not bees and bumblebees, but machines. Small, agile drones have become part and parcel of our world. They not only provide breathtaking aerial views or, flying as swarms equipped with lights, create impressive three-dimensional images in the sky; they also help to inspect critical infrastructure or survey large areas of land for nature conservation purposes.

For the time being, however, these machines are fragile and their flight is unstable. The tiny onboard computer has to correct their course constantly, and merely brushing an obstacle will often end in a crash.

Jointly with Stephan Weiss, Jan Steinbrener heads the Control of Networked Systems research group at the University of Klagenfurt. Their aim is to teach drones to fly autonomously, meaning that drones would no longer need to rely on external input such as GPS data to determine their position. This is particularly important in situations and environments where drones are no longer able to receive a GPS signal.

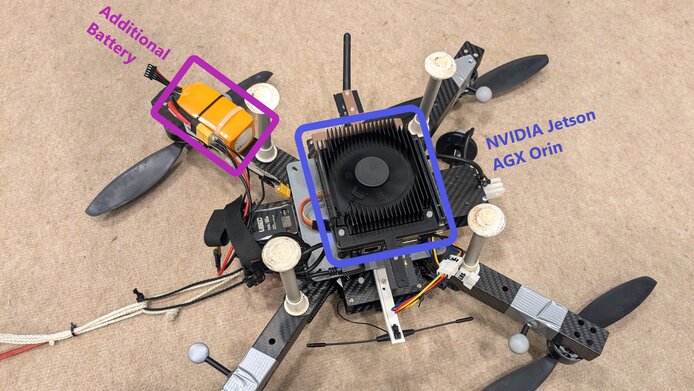

“A drone’s weight and, thus, its available energy and computing power are very limited,” explains Steinbrener. “This makes working with them a particular challenge.” Entitled “Learning to fly live”, Steinbrener's research project is funded by the FWF under its 1000 Ideas Program which promotes innovative research approaches. The title aptly describes his approach, as he takes inspiration from the way in which living creatures control their own bodies and navigate through space.

AI navigation

“In the midst of complex obstacles, GPS signals do not provide sufficient accuracy to enable drones to navigate,” says Steinbrener. “A drone must therefore be able to auto-navigate.” One way for the flying machines to do this is by analyzing the images from their built-in camera in order to calculate their own position and rotation in space. The researcher describes the problem with this approach: “In order to be able to fly and navigate stably, the drone ideally needs to calculate its own position a hundred times per second, but the small computer on board with its limited computing power is unable to process the camera images adequately.”

Instead, Steinbrener and his team harness data from another sensor in the drone, which signals whenever there is a change in position or rotation. This acceleration sensor delivers a lot of data, but the data is imprecise.

“We use artificial intelligence to bring together these two data streams,” notes Steinbrener. The computer on the drone manages to evaluate the images from the camera around ten times per second. In the intervals between two evaluated images, the new AI algorithms help to control the drone based on the less accurate data from the acceleration sensor. In addition, the algorithms can help to analyze camera images that are too dark or blurred. “This approach of using different sensor data has been around for a long time, but we are expanding it by means of artificial intelligence.” For their training, AI algorithms need data. In this respect, too, Steinbrener and his team have chosen an innovative approach.
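The interplay of a slow, accurate camera and a fast, imprecise acceleration sensor can be illustrated with a small one-dimensional simulation. The following is only a minimal sketch of the long-established sensor-fusion idea mentioned in the quote, not the team's AI-based method: the function name `simulate`, the sensor bias, the noise levels and the `blend` factor are all assumptions chosen for illustration. Only the two rates (a position estimate needed 100 times per second, camera images evaluated about 10 times per second) come from the text.

```python
import math
import random


def simulate(seed=0, imu_hz=100, camera_hz=10, seconds=5.0, blend=0.5):
    """Compare IMU-only dead reckoning with a camera-corrected estimate (1D toy model)."""
    rng = random.Random(seed)
    dt = 1.0 / imu_hz
    steps_per_image = imu_hz // camera_hz  # 10 control steps per evaluated image

    true_pos = true_vel = 0.0
    imu_pos = imu_vel = 0.0      # estimate from the acceleration sensor alone
    fused_pos = fused_vel = 0.0  # estimate that is also corrected by the camera
    bias = 0.3                   # assumed constant sensor bias in m/s^2

    for step in range(1, int(seconds * imu_hz) + 1):
        # Ground truth: a gentle back-and-forth motion.
        true_acc = math.sin(step * dt)
        true_vel += true_acc * dt
        true_pos += true_vel * dt

        # Fast but imprecise measurement (assumed bias plus noise).
        measured_acc = true_acc + bias + rng.gauss(0.0, 0.5)

        # Both estimates integrate the measurement at the full 100 Hz rate.
        imu_vel += measured_acc * dt
        imu_pos += imu_vel * dt
        fused_vel += measured_acc * dt
        fused_pos += fused_vel * dt

        # Every tenth step a camera-based position fix becomes available.
        if step % steps_per_image == 0:
            camera_pos = true_pos + rng.gauss(0.0, 0.02)
            innovation = camera_pos - fused_pos
            fused_pos += blend * innovation
            fused_vel += blend * innovation / (steps_per_image * dt)

    return abs(imu_pos - true_pos), abs(fused_pos - true_pos)


imu_only_error, fused_error = simulate()
print(f"IMU only: {imu_only_error:.2f} m, fused: {fused_error:.2f} m")
```

In this toy model the estimate based on the acceleration sensor alone drifts further and further from the true position, while the occasional camera fix keeps the fused estimate close to it. In nine out of ten control steps, however, the drone has nothing but the imprecise sensor to go on, and that is the gap the team's algorithms are meant to bridge.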

Learning by hovering

Instead of the usual method of training the algorithms on a powerful computer with previously recorded data, the researchers chose an approach inspired by living beings. “An infant only gradually learns how to use its own body and find its way in the world,” says Steinbrener. “In a similar way, we let the algorithms on the drone learn step by step how they can help with navigation.”

Initially, the researchers tested simple hovering without any major movements by the drone. The algorithms on the drone used its acceleration sensor data to gradually improve flight stability. This experiment was also the easiest to repeat, as the drone could be secured against crashing by means of a tether while hovering. “Drones are fragile and crashing can easily destroy them. And that can happen quickly if the algorithm hasn't reached peak performance,” says Steinbrener with a smile. “Working with such algorithms is always somewhat empirical. You come up with an idea, and then you just have to try it out.”
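What learning step by step during flight can look like is easiest to show with a deliberately simple stand-in. The sketch below is not the researchers' algorithm and involves no neural network; it only assumes that a hovering drone has zero true acceleration, so that every sensor reading is error, and refines an estimate of that error a little with each new sample instead of training on recorded data beforehand. The function name `learn_hover_bias`, the learning rate and the simulated readings are hypothetical.

```python
import random


def learn_hover_bias(readings, learning_rate=0.01):
    """Refine a sensor-bias estimate one reading at a time (online learning)."""
    bias_estimate = 0.0
    history = []
    for reading in readings:
        # Assumption: while hovering, the true acceleration is zero,
        # so the whole reading is sensor error.
        error = reading - bias_estimate
        bias_estimate += learning_rate * error  # small corrective step
        history.append(bias_estimate)
    return history


# Simulated hover: an assumed constant bias of 0.3 m/s^2 buried in noise.
rng = random.Random(1)
true_bias = 0.3
readings = [true_bias + rng.gauss(0.0, 0.5) for _ in range(2000)]

history = learn_hover_bias(readings)
print(f"first estimate: {history[0]:.3f}, final estimate: {history[-1]:.3f}")
```

The estimate starts far from the assumed bias and approaches it as more hover data arrives, which mirrors the idea of an algorithm that gets better while the tethered drone is in the air.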

The hovering tests were successful and gave the researchers insights into which types of AI algorithms work best for this task. As a next step, they plan to stabilize more complicated flight maneuvers using AI algorithms. They also intend to generalize their approach so that their software works with drone models and sensors other than those they tested.

Steinbrener has a great number of future applications in mind: “Drones that can navigate autonomously and without external signals could independently inspect critical infrastructure such as bridges or electric pylons that are otherwise difficult to reach, or survey situations that are hazardous for humans. They could even autonomously explore other planets where obviously no GPS for navigation exists.”

Personal details

Jan Steinbrener studied physics in Würzburg and the USA, where he completed his doctorate on the subject of X-ray microscopy. His career then took him to the Max Planck Institute for Medical Research in Heidelberg and to Carinthian Tech Research AG (CTR) in Villach. Since 2019, he has been conducting research at the Institute for Intelligent System Technologies at the University of Klagenfurt, where he was also appointed Vice Rector for Research and International Affairs in December 2024.

His research project “Learning to fly live” (2021–2024) received EUR 153,000 in funding from the Austrian Science Fund FWF under its 1000 Ideas Program, which funds particularly innovative approaches in the sciences.

Publications

Jantos T., Scheiber M., Brommer C. et al.: AIVIO: Closed-Loop, Object-Relative Navigation of UAVs With AI-Aided Visual Inertial Odometry, in: IEEE Robotics and Automation Letters 2024

Singh R., Steinbrener J., Weiss S.: Deterministic Framework based Structured Learning for Quadrotors, IEEE Conference Proceeding Abstract 2023