Finding the best wrong models

“All models are wrong, but some are useful.” For Gregor Kastner, this quote by the British statistician George E. P. Box sums up an important aspect of his own research. A Professor of Statistics at the University of Klagenfurt, Kastner heads the FWF's interdisciplinary Zukunftskolleg (Young Independent Researchers Group) entitled “High-dimensional statistical learning: new methods to advance economic and sustainability policies”, in which he collaborates with economists, computer scientists and sustainability researchers. The project, which started in 2019, brings together his team at the University of Klagenfurt and researchers at the University of Salzburg, the Austrian Institute of Economic Research (WIFO) and TU Wien.

The project aims to fundamentally improve the statistical models used for making predictions in a wide range of economic fields. Kastner explains the opening quote as follows: “Models can never represent reality in its entirety. They can only fit the intended application as well as possible and thus provide predictions and probabilities that help us make decisions.”

The curse of dimensionality

In simple terms, statistical models can be thought of as a collection of mathematical formulas. If they have been put together well, they can be fed numerical data and, on this basis, produce predictions about the future and indicate how likely these predictions are. For instance, some models try to predict how a country's economic growth will change in response to a change in the key interest rate. In this example, it is not only the level of the key interest rate that plays a role, but also a number of other economic factors that the model must take into account as sensibly as possible.

A statistical model of this kind can be constructed in many different ways, and it must be trained and tested using real-world data. A simple indicator of a model’s complexity is the number of parameters it includes. In the example above, the influence of the key interest rate is just one of the parameters; many other aspects, such as the unemployment rate, the productivity of various economic sectors or cyclical events, also come into play. To make predictions as accurate as possible, a model therefore needs a great many more parameters. Depending on the complexity of the data, a model can include anywhere from tens of thousands to hundreds of thousands of parameters.

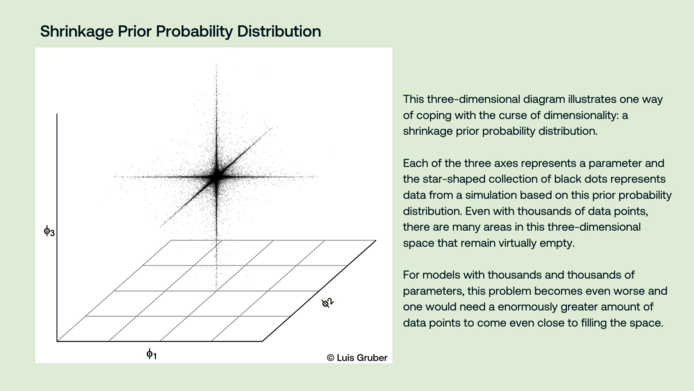

“The curse of dimensionality is the problem here,” notes Kastner. “In simple terms, the dimensionality of a model is the number of its parameters. The curse lies in the fact that, with too many parameters, one quickly reaches a point where the available data are no longer sufficient to serve as the basis for a good model.” If there are too few data for the number of parameters, the model tends simply to reproduce the existing data too closely, a phenomenon known as overfitting, and carries this over into its predictions. “As a result, the model cannot capture the underlying trends and correlations and produces distorted, inaccurate or overly precise statements about the future,” explains Kastner.
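The overfitting effect Kastner describes can be illustrated with a deliberately tiny toy example. The sketch below is a hypothetical illustration, not code from the project: it uses made-up data following a straight-line trend with small alternating errors of ±0.5, and compares a two-parameter line fitted by least squares with a polynomial that has one parameter per data point and therefore passes through every observation. The helper names `fit_line`, `fit_interpolant` and `mse` (mean squared error) are our own assumptions.

```python
# Hypothetical toy example: with as many parameters as data points, a model
# can reproduce the training data exactly yet forecast very badly.

def fit_line(xs, ys):
    """Ordinary least squares for y = a + b*x (2 parameters)."""
    n = len(xs)
    x_bar = sum(xs) / n
    y_bar = sum(ys) / n
    sxx = sum((x - x_bar) ** 2 for x in xs)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    b = sxy / sxx
    a = y_bar - b * x_bar
    return lambda x: a + b * x

def fit_interpolant(xs, ys):
    """Polynomial of degree n-1 through all n points (n parameters),
    evaluated in Lagrange form."""
    def predict(x):
        total = 0.0
        for i, (xi, yi) in enumerate(zip(xs, ys)):
            basis = 1.0
            for j, xj in enumerate(xs):
                if j != i:
                    basis *= (x - xj) / (xi - xj)
            total += yi * basis
        return total
    return predict

def mse(model, xs, ys):
    """Mean squared error of a model's predictions."""
    return sum((model(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

# Ten made-up observations of the trend y = 1 + 2x, disturbed by alternating
# errors of +/-0.5 (chosen instead of random noise so the result is reproducible).
xs = list(range(10))
ys = [1 + 2 * x + (0.5 if x % 2 == 0 else -0.5) for x in xs]

line = fit_line(xs, ys)
wiggly = fit_interpolant(xs, ys)

# In-sample fit: the 10-parameter model reproduces the data exactly.
print("training MSE, 2 parameters: ", round(mse(line, xs, ys), 3))
print("training MSE, 10 parameters:", round(mse(wiggly, xs, ys), 3))

# One-step-ahead "forecast"; the true trend value at x = 10 is 21.
print("forecast, 2 parameters: ", round(line(10), 2))
print("forecast, 10 parameters:", round(wiggly(10), 2))
```

Here the ten-parameter polynomial has a training MSE of zero, yet its forecast for the next step lands at about -490, while the two-parameter line forecasts about 20.83 against a true trend value of 21. The extra parameters are spent on reproducing the errors in the data rather than the underlying trend.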


More about the project: https://zk35.org/

Models for anything from the financial sector to location data

Overcoming this curse of dimensionality is a central focus of Kastner's project. “We have modelled macroeconomic data and share price volatility in the financial sector, looked at satellite data on agricultural land use and its correlation with EU subsidies, and investigated how to model and predict the density of people and traffic using anonymized cell phone activity,” says Kastner, outlining some of the areas in which he has applied high-dimensional statistical models.

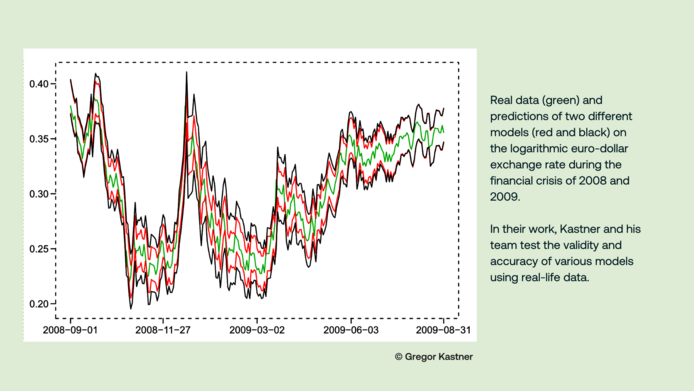

In their work, the researchers were less interested in producing concrete recommendations for political decisions than in making the underlying models more robust. “We use various approaches to improve the validity of the models, in particular methods from Bayesian econometrics, which have only become practical in recent decades thanks to the enormous increase in computing power,” notes Kastner. “In this way, we want to provide a stable basis for statistical models that can be used in many areas, including machine learning, which is currently the subject of much debate and in many cases is still error-prone.”
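The article does not detail which Bayesian methods the project uses, and the project's own models are not reproduced here. As a hedged, textbook-level illustration of why a prior helps when parameters outnumber observations, the sketch below computes the posterior mean of regression coefficients under an assumed Gaussian prior centred on zero, with a known noise variance; under these assumptions the posterior mean coincides with ridge regression. The data, the prior-strength values `lam` and the helper names `solve` and `posterior_mean` are hypothetical.

```python
# Hypothetical sketch: posterior mean of linear-regression coefficients under an
# assumed prior beta ~ N(0, tau^2 I) and noise ~ N(0, sigma^2). With
# lam = sigma^2 / tau^2, the posterior mean is the ridge estimate
#     beta_hat = (X^T X + lam * I)^(-1) X^T y,
# which is unique even when there are more coefficients than observations.

def solve(a, b):
    """Solve the linear system a x = b by Gaussian elimination with partial pivoting."""
    n = len(a)
    m = [list(row) + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x

def posterior_mean(X, y, lam):
    """Posterior mean of beta for design matrix X (list of rows) and response y."""
    p = len(X[0])
    # X^T X plus lam on the diagonal (the prior's contribution)
    xtx = [[sum(row[i] * row[j] for row in X) + (lam if i == j else 0.0)
            for j in range(p)] for i in range(p)]
    xty = [sum(row[i] * yi for row, yi in zip(X, y)) for i in range(p)]
    return solve(xtx, xty)

# Made-up data: 3 observations but 5 coefficients, so X^T X alone is singular
# and ordinary least squares has no unique solution.
X = [[1.0, 0.5, -0.3, 2.0, 0.1],
     [0.2, 1.5, 0.7, -1.0, 0.4],
     [-0.6, 0.3, 1.2, 0.8, -0.9]]
y = [2.0, 1.0, -0.5]

for lam in (0.1, 1.0, 10.0):
    beta = posterior_mean(X, y, lam)
    size = sum(b * b for b in beta) ** 0.5
    print(f"lam={lam:5.1f}  beta={[round(b, 3) for b in beta]}  |beta|={size:.3f}")
```

A larger `lam` corresponds to a tighter prior (smaller tau^2) and pulls the coefficients more strongly towards zero, which is one simple way a prior keeps an over-parameterized model from chasing noise.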

Looking back and forward

The research project is set to run until mid-2024, and Kastner looks back on a successful collaboration: “There were a few challenges to overcome, particularly in communication between the various disciplines. Some terms simply mean different things in different fields. Overall, however, the project was a great success. Not only did we advance science, but the funding also enabled us to finance numerous researchers and help them make progress in their field.” Most of the young scientists who originally submitted the project have found permanent positions in research; two of them have even been appointed professors.

“FWF funding has played an essential role in all this. The future will show which of the results will become applicable in the long term,” Kastner concludes. “The search for truth in models will definitely continue to be part of my work.”

Personal details

Gregor Kastner is Professor of Statistics and Head of the Institute of Statistics at the University of Klagenfurt. After studying technical mathematics, computer science and sports and working as a school teacher for a time, he turned to Bayesian econometrics in 2010 and earned his doctorate in 2014. In 2020 he was appointed professor at the University of Klagenfurt, where he is pleased to be able to pursue his passion for statistics, especially Bayesian modeling, and to guide and support other researchers in their work. The project “High-Dimensional Statistical Learning: New Methods for Economic and Sustainability Policy” (2019-2024) was funded by the Austrian Science Fund FWF with around two million euros as part of the “Zukunftskollegs” program, which promotes innovative and interdisciplinary cooperation between postdoctoral teams.

Publications

Feldkircher M., Gruber L., Huber F., Kastner G.: Sophisticated and small versus simple and sizeable: When does it pay off to introduce drifting coefficients in Bayesian vector autoregressions? Journal of Forecasting 2024

Vana L., Visconti E., Nenzi L., Cadonna A., Kastner G.: Bayesian Machine Learning meets Formal Methods: An application to spatio-temporal data. arXiv pre-print 2024

Gruber L., Kastner G.: Forecasting macroeconomic data with Bayesian VARs: Sparse or dense? It depends! arXiv pre-print 2023

Mozdzen A., Cremaschi A., Cadonna A. et al.: Bayesian modeling and clustering for spatio-temporal areal data: An application to Italian unemployment. Spatial Statistics, Vol. 52, 2022