Making gaming hardware fit for new tasks

The rapid growth of the video game industry, whose revenues have long since matched those of the global film industry, is having an impact far beyond entertainment and has become a crucial influence on the computer sector. This is particularly evident in hardware, where graphics cards optimised for video games, so-called “GPUs” (graphics processing units), have moved into entirely new areas. During the bitcoin hype, GPUs became scarce because thousands of them were being used for bitcoin “mining”. The comparatively inexpensive GPUs have also been used in supercomputers for years, as manufacturers look for an edge in the race to build the world’s most powerful computer. Although GPUs offer high theoretical performance, their strong specialisation makes them harder to use for other tasks, because they are optimised for the very specific computing operations required in graphics processing. Their basic architecture has remained practically unchanged for 15 years. In a project supported by the Austrian Science Fund FWF, Dieter Schmalstieg from Graz University of Technology is exploring how GPUs could be made more flexible without losing the advantage of their high computing power.

Parallel processing

“In the 1980s and 90s, computer games were mainly for children. Since then, gaming has become a large industry that has produced GPUs, which are currently the cheapest and most powerful hardware in the world,” says Dieter Schmalstieg. “This is how we came up with the idea that they could be used for other applications as well.” According to Schmalstieg, the key factor is the “parallelisability” of algorithms: what is the best way of breaking a task down into sub-tasks that can be processed in parallel? The hallmark of GPUs is their large number of processing cores. While a PC processor today typically has eight cores, new GPUs have more than 5,000 cores that can work in parallel, but these cores are not particularly flexible. “As a rule, GPUs apply a single command to huge amounts of data.” So far, any departure from this principle has come at the expense of efficiency, cancelling out the benefits. Schmalstieg and his team want to add new, more flexible elements to the ready-made software tools optimised for video games, without losing efficiency.

One line of attack is better use of memory. “The problem is a classic in computer science,” Schmalstieg says. There are several types of memory: small, fast cache memory; a middle tier of larger but slower memory; and finally the main memory, which is large but relatively slow. “Normally, memory allocation is taken care of by the manufacturer. We are looking for ways to give developer teams more control over it,” explains Schmalstieg. Developers will also gain more influence over how computing tasks are distributed among the different cores, which should further increase efficiency. The scientist compares his approach to “hacking” the hardware, because manufacturers are reluctant to open up their designs and a great deal about them is unknown. “This is about trade secrets.” The project has two goals: to exploit the full potential of current hardware, and to develop concepts that could be useful for future generations of hardware.
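To make “a single command applied to huge amounts of data” concrete, here is a minimal sketch of the data-parallel pattern. On a GPU this would be a CUDA kernel launched over thousands of threads; as an assumption for illustration, standard C++ threads on a CPU stand in for them, and the helper `parallel_apply` is hypothetical, not part of any GPU toolkit or of the project’s tools. The task is broken into equal sub-tasks (contiguous slices), and every worker runs identical code on its own slice.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <functional>
#include <thread>
#include <vector>

// Apply the same operation ("kernel") to every element of `data`.
// The index range is split into contiguous chunks, one per worker thread;
// every worker runs the identical code on its own slice of the data.
// Hypothetical helper for illustration only.
void parallel_apply(std::vector<float>& data,
                    const std::function<float(float)>& kernel,
                    unsigned num_workers) {
    assert(num_workers > 0);
    const std::size_t n = data.size();
    const std::size_t chunk = (n + num_workers - 1) / num_workers;  // ceiling division
    std::vector<std::thread> workers;
    for (unsigned w = 0; w < num_workers; ++w) {
        const std::size_t begin = w * chunk;
        const std::size_t end = std::min(n, begin + chunk);
        if (begin >= end) break;  // more workers than elements: nothing left to hand out
        workers.emplace_back([&data, &kernel, begin, end] {
            for (std::size_t i = begin; i < end; ++i) data[i] = kernel(data[i]);
        });
    }
    for (auto& t : workers) t.join();  // wait until every slice is done
}
```

For example, `parallel_apply(pixels, [](float x) { return 0.5f * x; }, 8)` would halve every brightness value in `pixels` using eight workers. The pattern is efficient precisely because every worker does the same thing; as soon as elements need different treatment, the simple split stops paying off, which is the inflexibility the article describes.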

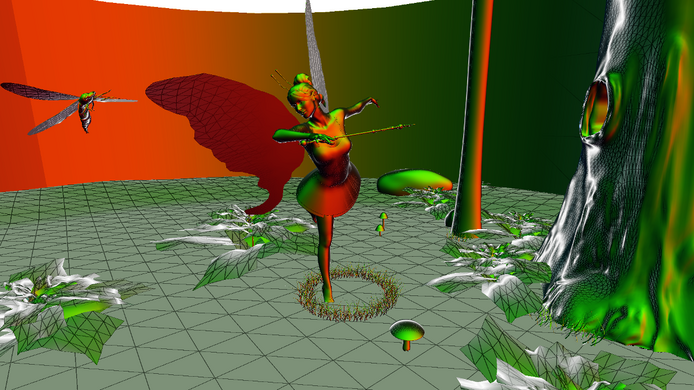

Making GPUs fit for virtual reality

Schmalstieg is particularly interested in graphics applications in which “latency” matters, that is, applications that must respond in real time, fast enough for users not to notice any delay. This is crucial in virtual reality (VR), for example, where users may feel nauseous if latency is too high. In Schmalstieg’s view, current GPUs are not optimised for some of the special requirements of VR systems: “With VR systems, each eye sees a separate image, and the two differ only slightly. A great deal could be saved by exploiting these similarities.” The idea itself is not new, he says, but the methods he has developed could improve the results. “Until now, the image has had to be completely recalculated for each eye. We have created a prototype that shows how this can be done more efficiently,” says the researcher. Virtual reality glasses bring the image to the eye through a lens that distorts the actual screen. Until now, a flat image had to be calculated first and then distorted. This, too, can be simplified with Schmalstieg’s more flexible tools by calculating the distorted image directly.
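The difference between “render flat, then distort” and “calculate the distorted image directly” can be sketched in a few lines. This is not Schmalstieg’s method; it is a toy under stated assumptions: a hypothetical procedural scene function `shade`, a single-coefficient radial lens model with an assumed coefficient `k1`, and nearest-neighbour resampling. All function names are illustrative. The two-pass version needs an intermediate image and a resampling step; the direct version evaluates the scene once per display pixel at its lens-mapped position.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for the renderer: brightness of the scene at
// normalised image-plane coordinates (u, v) in [-1, 1].
float shade(float u, float v) {
    return 0.5f + 0.5f * std::sin(8.0f * u) * std::cos(8.0f * v);
}

// Assumed single-coefficient radial lens model: the display pixel at
// normalised position (x, y) must show the scene point (x, y) * (1 + k1 * r^2).
void lens_map(float x, float y, float k1, float& u, float& v) {
    const float scale = 1.0f + k1 * (x * x + y * y);
    u = x * scale;
    v = y * scale;
}

// Normalised coordinate of the centre of pixel i in a row of n pixels.
float to_norm(int i, int n) { return (2.0f * i + 1.0f) / n - 1.0f; }

// Inverse of to_norm, rounded to the nearest pixel index.
int to_pixel(float c, int n) {
    return static_cast<int>(std::lround(((c + 1.0f) * n - 1.0f) / 2.0f));
}

// Two-pass approach: render a flat image, then resample it through the lens
// model (nearest neighbour). Pixels mapping outside the flat image stay black.
std::vector<float> render_two_pass(int w, int h, float k1) {
    assert(w > 0 && h > 0);
    std::vector<float> flat(static_cast<std::size_t>(w) * h);
    for (int y = 0; y < h; ++y)
        for (int x = 0; x < w; ++x)
            flat[y * w + x] = shade(to_norm(x, w), to_norm(y, h));
    std::vector<float> out(flat.size(), 0.0f);
    for (int y = 0; y < h; ++y)
        for (int x = 0; x < w; ++x) {
            float u, v;
            lens_map(to_norm(x, w), to_norm(y, h), k1, u, v);
            const int sx = to_pixel(u, w), sy = to_pixel(v, h);
            if (sx >= 0 && sx < w && sy >= 0 && sy < h) out[y * w + x] = flat[sy * w + sx];
        }
    return out;
}

// Direct approach: shade every display pixel at its lens-mapped position,
// with no intermediate flat image and no resampling pass.
std::vector<float> render_direct(int w, int h, float k1) {
    assert(w > 0 && h > 0);
    std::vector<float> out(static_cast<std::size_t>(w) * h, 0.0f);
    for (int y = 0; y < h; ++y)
        for (int x = 0; x < w; ++x) {
            float u, v;
            lens_map(to_norm(x, w), to_norm(y, h), k1, u, v);
            if (std::fabs(u) <= 1.0f && std::fabs(v) <= 1.0f) out[y * w + x] = shade(u, v);
        }
    return out;
}
```

With `k1 = 0` (no lens) both functions produce the same image; with a real lens the direct version skips one full image pass and avoids the blur introduced by resampling. In this toy, every pixel is shaded independently, so the direct approach is easy; on a standard rasterisation pipeline it is harder, because straight triangle edges become curves in distorted space, which is where more flexible tools come in.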

GPUs for supercomputers

Dieter Schmalstieg’s work is based on CUDA, the GPU programming platform of the manufacturer Nvidia. He uses it to develop software tools that other developers can then use from a high-level language such as C++; the tools act as a kind of interface between hardware and software developers. Although this basic research project deals with prototypes, the programs run in real time, as the researcher emphasises. While Schmalstieg’s research mainly concerns graphics, his project partner Markus Steinberger is interested in using GPUs in supercomputers. Thanks to their GPUs, current supercomputers often have enormous theoretical computing power, but they cannot exploit it because of a lack of flexibility. What matters here is not latency but making the best possible use of all GPU cores, and here, too, more flexible software tools can bring great benefits. The project succeeded in showing how the computing power of modern GPU-based supercomputers can be better exploited. Schmalstieg notes that the industry is not yet fully persuaded of the benefits, but he sees great potential. As he explains, “less standard software is used in this field, which could mean that there are fewer reservations about using alternative development environments. We’ve provided proof of the potential benefits that could be reaped.”
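One common technique for keeping all cores busy when tasks vary in cost is dynamic work distribution; whether the project used exactly this is not stated in the article, so treat the following as a generic sketch. Instead of handing each worker a fixed slice in advance, workers repeatedly claim the next unclaimed task from a shared atomic counter. The function name `run_dynamic` is hypothetical, and CPU threads again stand in for GPU cores.

```cpp
#include <atomic>
#include <cassert>
#include <cstddef>
#include <functional>
#include <thread>
#include <vector>

// Process tasks 0 .. num_tasks-1, whose costs may differ widely.
// Workers claim the next unclaimed task index from a shared atomic counter,
// so a worker that finishes early simply takes more work.
// Returns how many tasks each worker processed (hypothetical helper).
std::vector<std::size_t> run_dynamic(std::size_t num_tasks,
                                     const std::function<void(std::size_t)>& task,
                                     unsigned num_workers) {
    assert(num_workers > 0);
    std::atomic<std::size_t> next{0};
    std::vector<std::size_t> done(num_workers, 0);
    std::vector<std::thread> workers;
    for (unsigned w = 0; w < num_workers; ++w) {
        workers.emplace_back([&, w] {
            for (;;) {
                const std::size_t i = next.fetch_add(1);  // claim exactly one task
                if (i >= num_tasks) break;                 // no work left
                task(i);
                ++done[w];  // each worker writes only its own counter slot
            }
        });
    }
    for (auto& t : workers) t.join();
    return done;
}
```

With a static split, the worker that happens to receive the most expensive tasks determines the total runtime while the others sit idle. Claiming tasks one at a time balances the load at the cost of one atomic operation per task; real schedulers often claim small batches to reduce that overhead.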

Personal details

Dieter Schmalstieg conducts research at the Institute for Computer Graphics and Vision at Graz University of Technology. His research interests include augmented reality, virtual reality, computer graphics, visualisation and human-computer interaction.

Publications