Conspiracy theories on the digital circuit

Condensation trails full of mind-bending chemicals, an Earth that is hollow and inhabited by lizard people or the rich who can stay eternally young with children's blood – what may sound like the plots of trashy Hollywood movies now appears to more and more people as the most plausible explanation for an increasingly complex and threatening world.

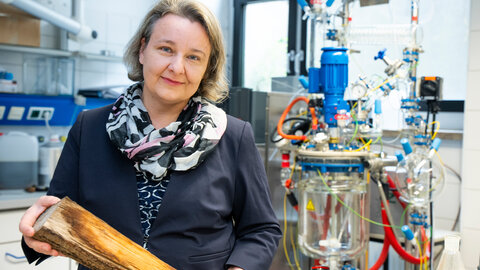

Alongside fake news, which spreads like wildfire via social media, conspiracy theories represent a veritable menace for our liberal democracies. The work of Jana Lasser, a complexity researcher from the Graz University of Technology, is helping to develop a scientific basis for potential solutions.

While previously focused on fake news, Lasser now wants to analyze huge amounts of data from social networks in order to identify the root causes of the appeal of conspiracy theories. In this interview, Lasser talks about her new project, for which she recently received the "netidee SCIENCE" award from Internet Stiftung and the Austrian Science Fund FWF.

Personal details

Jana Lasser studied physics in Germany and completed her doctorate on the physics of complex systems. After her thesis, she turned to complexity research, where she uses data science methods to find answers to socially relevant questions. Following postdoc positions in Göttingen and at the Complexity Science Hub in Vienna, Lasser completed her professorial qualification this year at Graz University of Technology on the computer-aided modelling of complex social systems.

Myths about conspiring forces and fake news have probably been around for as long as human civilization. Why is it that fake news, disinformation and conspiracy theories have just recently become a problem?

Jana Lasser: I think that two things are mainly to blame. One is the social aspect: we live in societies with great inequalities. People whose lives are fraught with serious problems are accordingly more likely to buy into explanations that challenge the current system.

The second aspect is the wide prevalence of digitalization and the associated potential for the dissemination of fake news and conspiracy theories. Social media, which optimize their recommendation algorithms on the basis of interactions, constitute a problem. Emotional content, for instance, generates attention, which is why such content spreads particularly well – a mechanism also exploited by fake news.

It's a fact that disinformation is more widespread than ever before. What consequences can the flood of fake news have for our coexistence?

Lasser: Ultimately, there is a risk that our democratic societies will no longer be able to survive. If we, as citizens, can't agree on how to solve problems together because we're moving to some extent in different information worlds, democratic institutions will collapse.

If, for instance, people doubt the legitimacy of election results and thereby also challenge the authority of decision-makers, our social order ceases to function. These are the dangers that motivate my research.

Disinformation, fake news, conspiracy myths – in everyday life we often use these terms interchangeably. But how do scientists define these terms?

Lasser: Disinformation refers to false information that is deliberately spread with the intention of manipulating people. This element of intent is what distinguishes it from misinformation: if relatives send me a hoax item from a newspaper, this is not disinformation, as they were probably not out to manipulate me.

Once disinformation is presented in a professional way, we are talking about fake news. There are websites that studiously copy the style of serious news sites, including colorful article tiles and quotes from completely fictitious sources and authors.

Conspiracy theories go beyond this. It is a characteristic of such myths that they blame an unidentified elite for negative developments. In addition, conspiracy myths contain claims that cannot be scientifically disproved, and this makes it particularly difficult to counter such statements. But conspiracy theories also use fake news to underpin their narratives.

At first glance, fake news is difficult to distinguish from real news. Nevertheless, this is precisely what you had to do for your research over and over again. How do you do that?

Lasser: Certainly not by checking the truthfulness of every single news item. You do it by analyzing its origin. We relied on service providers that evaluate media according to journalistic criteria such as these: does a given news agency consistently distinguish between news items and opinion, is it always clear who wrote an article, what is the agency’s ownership structure, and are error correction processes in place? Good answers result in a good rating.

We investigated how often politicians share links to websites that meet this catalogue of criteria. One of our findings was a massive decline over the last six years in the trustworthiness of the pages that US Republican politicians link to. In addition, the texts accompanying the links increasingly used language patterns that appeal to emotion rather than presenting verifiable facts.
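The source-based approach described here can be illustrated with a minimal sketch. It assumes a table of domain ratings on a 0 to 100 scale and a list of (year, URL) pairs for shared links. The domain names, the scores and the function `mean_rating_by_year` are hypothetical and are not taken from the study.

```python
from collections import defaultdict
from urllib.parse import urlparse

# Hypothetical trustworthiness ratings per domain (0 = poor, 100 = excellent).
# Real ratings would come from a media-rating service; these are placeholders.
RATINGS = {"reliable.example": 90.0, "dubious.example": 20.0}


def mean_rating_by_year(shared_links, ratings=RATINGS):
    """Average the rating of all rated domains linked to in each year."""
    totals = defaultdict(lambda: [0.0, 0])  # year -> [sum of ratings, count]
    for year, url in shared_links:
        domain = urlparse(url).netloc.lower()
        if domain.startswith("www."):
            domain = domain[4:]
        if domain in ratings:  # links to unrated domains are skipped
            totals[year][0] += ratings[domain]
            totals[year][1] += 1
    return {year: total / count for year, (total, count) in totals.items()}


# Made-up example data, for illustration only.
links = [
    (2016, "https://www.reliable.example/a"),
    (2016, "https://dubious.example/b"),
    (2022, "https://dubious.example/c"),
    (2022, "https://unrated.example/d"),
]
yearly = mean_rating_by_year(links)
```

A falling yearly average in such a table would correspond to the kind of decline in trustworthiness mentioned above, without anyone having to fact-check individual articles.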

You have just received the “netidee SCIENCE” grant for your new project on conspiracy theories. What is this project about?

Lasser: We want to understand which factors contribute to conspiracy theories becoming popular. We have a number of hypotheses about this: the emotionally charged language, but also the fact that conspiracy theories try to explain a complex world with simplistic answers.

We will also analyze economic aspects, because the spread of conspiracy myths can also be linked to financial interests. During the corona protests, for example, some people made a lot of money by selling merchandise or arranging bus transportation.

Popularity is difficult to measure. How will you test these hypotheses?

Lasser: We have a huge dataset of public group chats that were collected on the Telegram platform in German-speaking countries during the coronavirus pandemic. In these texts, we can trace which conspiracy theory became popular at what point by identifying words that are typical of individual conspiracy myths.

For example, the word “adrenochrome” is found only in the context of a theory which claims that politicians drink children's blood. If we count how often this word appears in the chats in a given time slot, we can estimate the popularity of a conspiracy theory in this set of messages. With the help of the tools that Mathias Angermaier developed as part of his master's thesis with us, this works automatically for large amounts of data.
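The counting idea can be sketched in a few lines. This is a simplified illustration, not the tooling developed in the master's thesis: the function `marker_counts_per_week`, the weekly time slot and the sample messages are all assumptions, and only the marker word "adrenochrome" comes from the interview.

```python
import re
from collections import Counter
from datetime import datetime

# Marker words assumed to be typical of one conspiracy theory.
MARKER_WORDS = {"adrenochrome"}


def marker_counts_per_week(messages, markers=MARKER_WORDS):
    """Count marker-word occurrences per ISO week.

    `messages` is an iterable of (timestamp, text) pairs.
    Returns a Counter keyed by (ISO year, ISO week number).
    """
    counts = Counter()
    for timestamp, text in messages:
        tokens = re.findall(r"\w+", text.lower())
        hits = sum(1 for token in tokens if token in markers)
        if hits:
            iso_year, iso_week, _ = timestamp.isocalendar()
            counts[(iso_year, iso_week)] += hits
    return counts


# Made-up chat messages, for illustration only.
sample = [
    (datetime(2020, 4, 6), "They say adrenochrome is everywhere."),
    (datetime(2020, 4, 7), "Nothing to see here."),
    (datetime(2020, 4, 8), "Adrenochrome, adrenochrome, again!"),
    (datetime(2020, 4, 14), "More adrenochrome talk."),
]
weekly = marker_counts_per_week(sample)
```

Plotting such counts over time gives a rough popularity curve for one theory within the collected chats. In practice the raw counts would likely need to be normalized by the total number of messages per time slot, since chat activity itself varies.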

Telegram is just a messaging service. Other social media, on the other hand, use a recommendation algorithm to publish content on users' timelines. Does this difference affect the spreading of conspiracy theories?

Lasser: In order to understand this, we will compare the spread of a theory on Telegram with its popularity on right-wing Twitter clones, i.e. on social media that are essentially copies of Twitter. Unlike Telegram, however, these sites do have algorithmic moderation. It is our hypothesis that conspiracy theories will grow faster there.

Previous strategies against conspiracy theories and disinformation have focused on fact-checking – with very limited success. Why is that?

Lasser: Fact checks don't work for several reasons, first of all because of the time lag: in the fast-paced world of social media, an item of content gets most attention in the first 24 hours after going online. Fact-checking, on the other hand, usually needs two to three days before publishing. By that time, however, attention has already shifted somewhere else.

The second problem is the so-called backfire effect: fact checks are perceived as attempts by the “other side” to further their agenda, which gives more credibility to the disinformation or conspiracy theory. So you see, earlier strategies have not been very successful. However, building on our research, new approaches could emerge to curb the spread of conspiracy myths and fake news.

One strategy would be to simply remove dubious content. What do you think of that?

Lasser: It's not about what content should be deleted, but about moderating attention. Content that contributes to jeopardizing our civil discourse, for example, shouldn't be given so much reach. But this is all about finding the right balance between democratic values and civil discourse on the one side and freedom of expression on the other.

Currently, the moderation is steered by the profit interests of platforms that want to maximize attention. In my view, social media have become so important for the public exchange of information that we as a society should consider a different route. However, research alone cannot decide how we handle the balance between freedom of expression and civil discourse. We must find a social consensus.

About the project

Conspiracy theories are becoming increasingly popular – with potentially disastrous consequences for our democratic societies. In order to curb their influence, we need to understand the social, psychological and economic factors that make conspiracy myths so successful. These are the central issues in the project of complexity researcher Jana Lasser. Screening huge amounts of data, she extracts the factors that can explain the appeal of conspiracy myths. In addition, she intends to take into account technological differences between various platforms. In this way, Lasser hopes to find new strategies to combat the spreading of conspiracy theories.